Energy Efficiency in Continuous Dietary Monitoring: Technologies, Applications, and Clinical Validation for Metabolic Research



Abstract

This article examines the critical role of continuous dietary monitoring in understanding human energy metabolism and its application in clinical research and drug development. It explores the foundational science of energy balance, the current landscape of digital and biomarker-based monitoring technologies, and strategies for optimizing data accuracy and patient adherence. A comparative analysis validates the efficacy of these tools for managing metabolic diseases, with a specific focus on their utility in weight management, diabetes research, and the evaluation of emerging therapeutics like GLP-1 receptor agonists. The synthesis provides a roadmap for researchers and drug development professionals to leverage these tools for robust, data-driven nutritional science.

The Science of Energy Balance and the Imperative for Continuous Monitoring

Fundamental Concepts and Definitions

Energy Balance is the state achieved when the energy consumed as food and drink (Energy Intake) equals the total energy expended by the body (Energy Expenditure) over a defined period. A positive energy balance (intake > expenditure) leads to weight gain, while a negative energy balance (intake < expenditure) results in weight loss [1] [2].

Energy Intake (EI) is the total energy provided by the macronutrients (carbohydrates, fats, proteins, and alcohol) consumed in the diet.

Energy Expenditure (EE) is the total energy an individual utilizes each day, comprising several components [3] [2]:

- Resting Energy Expenditure (REE) or Basal Metabolic Rate (BMR): The energy required to maintain fundamental physiological functions at rest. This is the largest component of total daily energy expenditure [2].

- Thermic Effect of Food (TEF) or Diet-Induced Thermogenesis (DIT): The energy cost of digesting, absorbing, and storing consumed nutrients [2].

- Activity Energy Expenditure (AEE): The energy cost of physical activity, which can be further divided into:

  - Exercise Activity Thermogenesis (EAT): Energy expended during voluntary exercise.

  - Non-Exercise Activity Thermogenesis (NEAT): Energy expended for all other physical activities, such as walking, talking, and fidgeting [2].

Adaptive Thermogenesis (AT) is a physiological adaptation characterized by a change in energy expenditure that is independent of changes in body composition (fat mass and fat-free mass) or physical activity levels. During weight loss, it manifests as a reduction in energy expenditure beyond what is predicted, thereby conserving energy and opposing further weight loss [4].

Key Quantitative Values of Energy Expenditure Components

Table 1: Typical contribution of different components to total daily energy expenditure in sedentary individuals.

| Component of Expenditure | Typical Contribution (%) | Description |

|---|---|---|

| Basal Metabolic Rate (BMR) | 60-75% | Energy for core bodily functions at rest [2] |

| Thermic Effect of Food (TEF) | ~10% | Energy cost of digesting, absorbing, and storing nutrients [4] |

| Non-Exercise Activity Thermogenesis (NEAT) | Highly variable | Energy from spontaneous daily activities [2] |

Frequently Asked Questions (FAQs) and Troubleshooting

FAQ 1: In our dietary intervention study, we observe that weight loss plateaus despite maintained caloric restriction. Is this a methodological error or an expected physiological response?

Answer: This is an expected physiological response, largely driven by Adaptive Thermogenesis. As individuals lose weight, their body resists further weight loss through several mechanisms [2] [4]:

- Decreased Resting Energy Expenditure: A reduction in REE occurs that is greater than what would be expected from the loss of fat and fat-free mass alone [4].

- Increased Metabolic Efficiency: The body becomes more efficient at utilizing energy, thereby reducing overall energy expenditure.

- Potential Hormonal Changes: Changes in hormones like leptin and ghrelin can increase hunger and reduce energy expenditure.

Troubleshooting Guide:

- Confirm Phenomenon: Measure REE via indirect calorimetry before and after weight loss and compare it to predicted values based on new body composition to quantify adaptive thermogenesis [4].

- Adjust Energy Prescription: Recognize that a fixed caloric intake will not produce continuous linear weight loss. Energy intake targets may need to be recalculated periodically based on the new, lower energy expenditure.

- Manage Expectations: Inform study participants that weight loss plateaus are a normal part of the process and not necessarily a sign of non-adherence.

FAQ 2: What are the primary sources of measurement error when calculating energy balance in free-living human subjects?

Answer: Accurately measuring all components of energy balance is notoriously challenging. The main sources of error are [5]:

- Energy Intake Measurement: Self-reported dietary intake (e.g., 24-hour recalls, food diaries) is frequently subject to under-reporting and misrepresentation, making it the most unreliable component in energy balance equations.

- Energy Expenditure Measurement: While the Doubly Labeled Water (DLW) method is the gold standard for measuring Total Energy Expenditure (TEE) in free-living individuals, it has limitations. It requires sophisticated equipment for analysis, is expensive, and its accuracy can be influenced by the estimate of the food quotient used in calculations [3] [5].

- Body Composition Measurement: Predictive equations for body composition based on anthropometrics (like skinfold thickness) can have large individual-level errors compared to gold-standard methods like the 4-component model, leading to inaccurate estimates of energy stores [5].

Troubleshooting Guide:

- Mitigate Intake Error: Use multiple, non-consecutive 24-hour recalls administered by trained interviewers. When possible and ethically permissible, provide all food to participants to achieve precise intake data [3] [5].

- Validate Expenditure: Ensure DLW measurements are conducted with strict protocol adherence, including background urine samples and proper dose administration [3].

- Use Gold-Standard Body Composition Methods: For high-precision studies, use DXA or a 4-component model instead of predictive equations to track changes in fat and fat-free mass [3].

FAQ 3: Are there new technologies that can automate and improve the accuracy of dietary intake monitoring?

Answer: Yes, the field of Automatic Dietary Monitoring (ADM) is rapidly evolving to address the limitations of self-report. Emerging technologies include [6]:

- Wearable Bio-Sensors: Devices like the iEat wrist-worn sensor use bio-impedance to detect the unique electrical patterns created by hand-to-mouth gestures and interactions with food and utensils, allowing recognition of food intake activities and food types.

- Acoustic Sensors: Wearable microphones (e.g., on the neck) can detect sounds associated with chewing and swallowing.

- Image-Based Methods: Smartphone applications that use photos to identify foods and estimate portion sizes.

Troubleshooting Guide:

- Contextual Limitations: Bio-impedance wearables may struggle to differentiate between foods with similar electrical properties. Acoustic sensors can be affected by ambient noise.

- Validation is Key: Any new ADM technology must be validated against established methods (e.g., weighed food records) in the specific population of interest before use in research [6].

- Multi-Sensor Approach: The highest accuracy may be achieved in the future by combining data from multiple sensors (e.g., inertial measurement units for gesture recognition and acoustic sensors for swallowing detection).

Experimental Protocols for Key Measurements

Protocol 1: Measuring Adaptive Thermogenesis in a Clinical Trial

This protocol is based on a study investigating the effects of probiotics on adaptive thermogenesis during continuous energy restriction [4].

1. Objective: To determine whether an intervention (e.g., probiotic supplementation) attenuates adaptive thermogenesis induced by a hypocaloric diet in adults with obesity.

2. Study Design:

- Type: Randomized, double-blind, placebo-controlled clinical trial.

- Population: Adult males with obesity (BMI 30.0–39.9 kg/m²), weight-stable.

- Intervention:

  - Control Group (CERPLA): Continuous Energy Restriction (CER) + Placebo.

  - Intervention Group (CERPRO): Continuous Energy Restriction (CER) + Probiotic.

- Dietary Protocol:

  - CER set at 30% below Total Daily Energy Expenditure (TDEE).

  - TDEE calculated as: [Measured REE × Physical Activity Factor (1.5)] + Thermic Effect of Food (10%) [4].

3. Key Measurements and Methods:

- Resting Energy Expenditure (REE): Measured via indirect calorimetry after an overnight fast at baseline and after the intervention.

- Body Composition: Assessed using DXA or other precise methods at baseline and post-intervention to measure changes in Fat Mass (FM) and Fat-Free Mass (FFM).

- Calculation of Adaptive Thermogenesis:

  - Step 1: Predict REE at follow-up using a regression equation derived from baseline data, incorporating the new FFM and FM.

  - Step 2: Calculate adaptive thermogenesis as: REE (measured) - REE (predicted).

  - A significant negative value (e.g., -129 ± 169 kcal) indicates the presence of adaptive thermogenesis [4].
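
The dietary prescription and the two-step adaptive thermogenesis calculation above can be sketched in a few lines of Python. This is a minimal illustration, not the study's own code: the function names are hypothetical, the regression coefficients are placeholders (a real analysis would fit them to the cohort's baseline REE, FFM, and FM data), and the 10% thermic effect is assumed here to apply to REE × activity factor.

```python
def tdee_from_ree(ree_kcal, activity_factor=1.5, tef_fraction=0.10):
    """TDEE = (measured REE x activity factor) + thermic effect of food.

    Assumption: TEF is taken as tef_fraction of (REE x activity factor).
    """
    active_kcal = ree_kcal * activity_factor
    return active_kcal + tef_fraction * active_kcal


def cer_prescription(tdee_kcal, restriction=0.30):
    """Daily energy target for continuous energy restriction (30% below TDEE)."""
    return tdee_kcal * (1.0 - restriction)


def predict_ree(ffm_kg, fm_kg, coefficients):
    """Step 1: predicted REE from a baseline-derived regression.

    coefficients = (intercept, slope_ffm, slope_fm); placeholder values only.
    """
    intercept, slope_ffm, slope_fm = coefficients
    return intercept + slope_ffm * ffm_kg + slope_fm * fm_kg


def adaptive_thermogenesis(measured_ree_kcal, predicted_ree_kcal):
    """Step 2: AT = REE (measured) - REE (predicted); negative values indicate AT."""
    return measured_ree_kcal - predicted_ree_kcal


# Illustrative numbers only (not study data).
baseline_model = (500.0, 20.0, 5.0)  # hypothetical coefficients
target_kcal = cer_prescription(tdee_from_ree(1800.0))
predicted = predict_ree(ffm_kg=60.0, fm_kg=30.0, coefficients=baseline_model)
at_kcal = adaptive_thermogenesis(measured_ree_kcal=1721.0, predicted_ree_kcal=predicted)
print(f"Energy target: {target_kcal:.0f} kcal/day, adaptive thermogenesis: {at_kcal:.0f} kcal/day")
```

Keeping the prediction and the subtraction as separate functions mirrors the two protocol steps, so the regression model can be swapped without touching the AT definition.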

Protocol 2: Comprehensive Energy Balance Assessment (POWERS Study Model)

This protocol outlines the methodology for a longitudinal study of the weight-reduced state [3].

1. Objective: To understand physiological contributors to weight regain by examining energy intake and expenditure phenotypes before and after behavioral weight loss.

2. Study Design:

- Type: Longitudinal cohort study.

- Population: Adults with obesity (BMI 30-<40 kg/m²).

- Phases:

  - Weight Loss: Supervised behavioral intervention until ≥7% weight loss.

  - Weight-Reduced State: Follow-up with no intervention support at 4 months (T4) and 12 months (T12).

- Crucial Requirement: Weight stability is required immediately before baseline (pre-weight loss) and post-weight loss (T0) measurement periods.

3. Key Measurements and Timelines:

Table 2: Measurement schedule for the POWERS study design [3].

| Measurement | Baseline (BL) | Post-WL (T0) | 4-Month FU (T4) | 12-Month FU (T12) |

|---|---|---|---|---|

| Body Weight & Composition (DXA) | Yes | Yes | Yes | Yes |

| Total Energy Expenditure (DLW) | Yes | Yes | Yes | Yes |

| Resting Energy Expenditure | Yes | Yes | Yes | Yes |

| Energy Intake (Serial DLW) | Yes | Yes | Yes | Yes |

| 24-Hour Food Recalls | Yes | Yes | Yes | Yes |

| Muscle Efficiency | Yes | Yes | Not specified | Not specified |

4. Methodological Details:

- Doubly Labeled Water (DLW) for TEE & EI:

  - Participants receive an oral dose of H₂¹⁸O and D₂O.

  - Urine samples are collected at baseline and over a 14-day period, then analyzed for isotope elimination rates.

  - CO₂ production is calculated, and TEE is derived using an established equation.

  - Energy Intake is calculated as an average over the 14-day period from changes in body energy stores (measured by DXA) plus TEE [3].

- Energy Expenditure Components: Resting Energy Expenditure (REE) is measured by indirect calorimetry. Non-Resting Energy Expenditure (NREE) is calculated as TEE - REE [3].
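
The intake-balance arithmetic described above (EI = TEE + change in body energy stores, and NREE = TEE - REE) can be illustrated with a short sketch. The energy densities used to convert DXA-measured mass changes into kilocalories (about 9,500 kcal/kg for fat mass and 1,000 kcal/kg for fat-free mass) are commonly cited approximations and are an assumption here, not values taken from the protocol; the function names are likewise hypothetical.

```python
# Assumed energy densities (kcal per kg of tissue change); not from the source protocol.
FAT_MASS_KCAL_PER_KG = 9500.0
FAT_FREE_MASS_KCAL_PER_KG = 1000.0


def energy_intake_kcal_per_day(tee_kcal_per_day, delta_fm_kg, delta_ffm_kg, days=14):
    """Average daily EI over the DLW period: TEE plus the daily change in energy stores.

    delta_fm_kg and delta_ffm_kg are end-minus-start changes measured by DXA,
    so they are negative during weight loss.
    """
    delta_stores_kcal = (delta_fm_kg * FAT_MASS_KCAL_PER_KG
                         + delta_ffm_kg * FAT_FREE_MASS_KCAL_PER_KG)
    return tee_kcal_per_day + delta_stores_kcal / days


def non_resting_energy_expenditure(tee_kcal_per_day, ree_kcal_per_day):
    """NREE = TEE - REE."""
    return tee_kcal_per_day - ree_kcal_per_day


# Illustrative values only: a participant losing 0.7 kg fat and 0.14 kg FFM in 14 days.
ei = energy_intake_kcal_per_day(2800.0, delta_fm_kg=-0.7, delta_ffm_kg=-0.14)
nree = non_resting_energy_expenditure(2800.0, 1700.0)
print(f"Estimated EI: {ei:.0f} kcal/day, NREE: {nree:.0f} kcal/day")
```

When body stores are unchanged, both deltas are zero and the estimate reduces to EI = TEE, which is why weight stability is required before the measurement periods.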

Essential Research Reagents and Materials

Table 3: Key materials and equipment for energy balance research.

| Item | Function in Research | Example / Specification |

|---|---|---|

| Doubly Labeled Water (DLW) | Gold-standard method for measuring total energy expenditure in free-living individuals over 1-2 weeks [3]. | H₂¹⁸O (10 atom % excess) and D₂O (5 atom % excess) [3]. |

| Dual-Energy X-ray Absorptiometry (DXA) | Precisely measures body composition (fat mass, fat-free mass, bone mass) to calculate energy stores [3]. | - |

| Indirect Calorimetry System | Measures resting energy expenditure and substrate utilization by analyzing oxygen consumption and carbon dioxide production [2] [4]. | - |

| Stable Isotope Analyzer | Analyzes isotope enrichment in biological samples (e.g., urine) for DLW studies [3]. | Off-axis integrated cavity output spectroscopy [3]. |

| Bio-impedance Sensor (Research) | Emerging tool for automatic dietary monitoring by detecting gestures and food interactions via electrical impedance [6]. | e.g., iEat wearable with electrodes on each wrist [6]. |


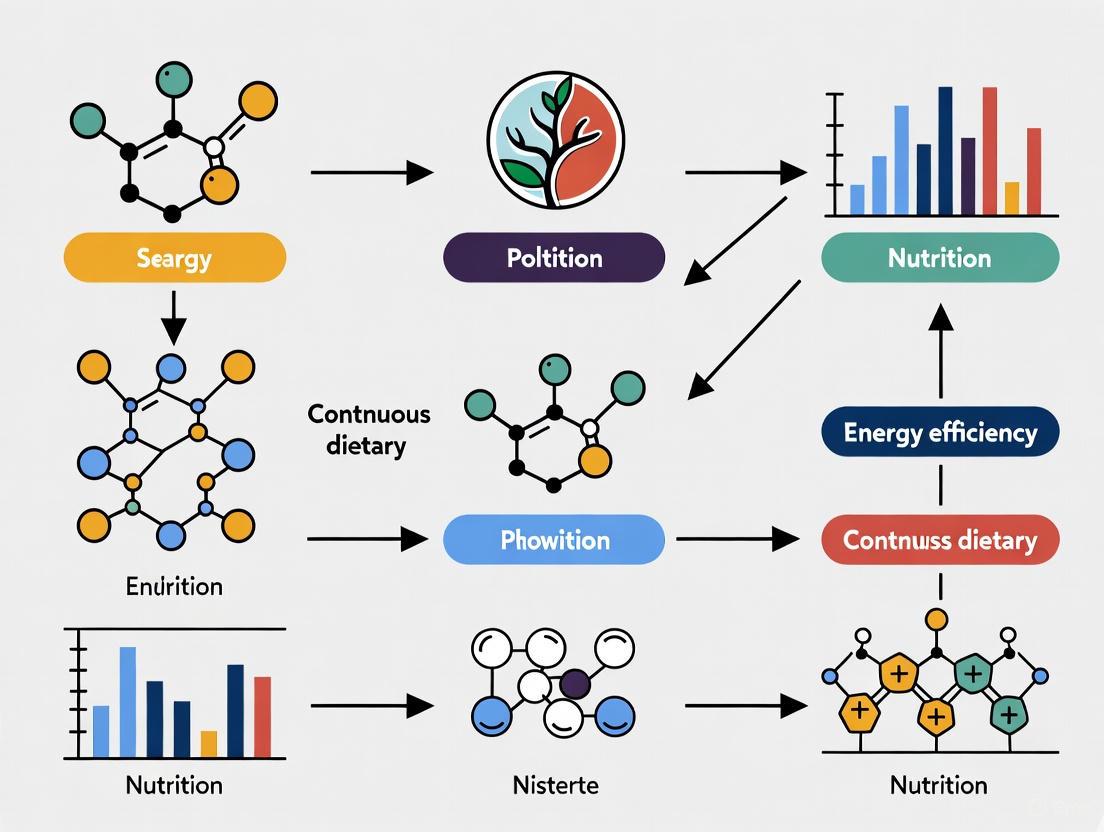

Visualizing Energy Balance and Experimental Workflow

Energy Balance and Adaptive Thermogenesis

Diagram 1: The human energy balance system. A negative energy balance (weight loss) can trigger Adaptive Thermogenesis, which reduces Energy Expenditure, creating a physiological counter-response that favors weight regain.

Adaptive Thermogenesis Measurement Protocol

Diagram 2: Workflow for a clinical trial measuring adaptive thermogenesis. The key calculation involves comparing measured REE post-intervention to the REE predicted from the new body composition.

The Limitations of Traditional Dietary Assessment Methods

Accurate dietary assessment is fundamental to nutrition research, public health monitoring, and the development of evidence-based dietary guidelines [8]. However, traditional methods for measuring dietary intake are notoriously challenging and subject to significant measurement error, which poses a substantial impediment to scientific progress in the fields of obesity and nutrition research [8] [9]. These limitations are particularly critical when investigating energy efficiency and conducting continuous dietary monitoring, as inaccuracies in core intake data can compromise the entire research endeavor. This technical support center outlines the specific limitations of traditional dietary tools, provides troubleshooting guidance for researchers encountering these issues, and details protocols for mitigating error in dietary assessment experiments.

FAQ: Core Concepts for Researchers

Q1: What are the primary categories of traditional dietary assessment methods? Traditional methods can be broadly categorized into retrospective and prospective tools [10]. Retrospective methods, such as 24-hour recalls and Food Frequency Questionnaires (FFQs), rely on a participant's memory of past intake. Prospective methods, primarily food records or diaries, require the participant to record all foods and beverages as they are consumed [8] [10].

Q2: Why is the assessment of energy intake uniquely challenging? Energy intake is tightly regulated by physiological controls, resulting in low between-person variation after accounting for weight and demographic variables [11]. Furthermore, the deviations from energy balance that lead to clinically significant weight changes are very small (on the order of 1-2% of daily intake), meaning that assessment tools require extreme precision to detect these differences, a level of accuracy that current self-report methods typically fail to achieve [11].

Q3: What is the "gold standard" for validating energy intake assessment? The Doubly Labeled Water (DLW) method is considered the reference method for measuring total energy expenditure (TEE) in free-living, weight-stable individuals [12]. It provides an objective measure against which self-reported energy intake can be validated, as in a state of energy balance, intake should equal expenditure [12].

Q4: How does participant burden affect dietary data quality? Participant burden is a major source of error. High burden can lead to non-participation bias, where certain population groups are underrepresented, and a decline in data quality over the recording period [8] [10]. In food records, burden often causes reactivity, where participants alter their usual diet—either by choosing simpler foods or by eating less—because they are required to record it [8] [10].

Troubleshooting Guide: Common Experimental Issues & Solutions

Table 1: Limitations and Methodological Solutions in Dietary Assessment

| Problem Area | Specific Issue | Signs to Detect the Issue | Corrective & Preventive Actions |

|---|---|---|---|

| Measurement Error | Systematic under-reporting of energy intake [12]. | Reported energy intake is significantly lower than Total Energy Expenditure from DLW; implausibly low energy intake relative to body weight [11] [12]. | Use multiple, non-consecutive 24-hour recalls to capture day-to-day variation [8]. Integrate recovery biomarkers (e.g., DLW, urinary nitrogen) to quantify and adjust for measurement error [8] [12]. |

| Participant Reactivity | Participants change diet during monitoring [10]. | A marked decline in reported intake or complexity of foods recorded after the first day of a multi-day food record. | Use unannounced 24-hour recalls to capture intake without prior warning [8]. For food records, include a run-in period to habituate participants to the recording process. |

| Nutrient Variability | High day-to-day variability in nutrient intake obscures habitual intake [8]. | High within-person variation for nutrients like Vitamin A, Vitamin C, and cholesterol, making a few days of records non-representative [8]. | Increase the number of short-term assessments (e.g., multiple 24HRs over different seasons) and use statistical adjustment (e.g., the National Cancer Institute's method) to estimate usual intake [8]. |

| Study Design Complexity | Difficulty defining an appropriate control group in Dietary Clinical Trials (DCTs) [13]. | Lack of a well-formulated placebo for dietary interventions; high collinearity between dietary components [13]. | Carefully match control and intervention groups for key confounders. Use a sham intervention or wait-list control where possible. Account for collinearity in the statistical analysis plan [13]. |

Experimental Protocols for Method Validation

Protocol 1: Controlled Feeding Study for Validation Against True Intake

This protocol validates a dietary assessment method by comparing estimated intake to known, weighed intake under controlled conditions [14].

- Participant Recruitment & Randomization: Recruit a sufficient sample size (e.g., N=150+) representing the target population. Randomize participants to different feeding days or menus to account for order and menu effects [14].

- Food Preparation & Serving: Prepare all meals and beverages in a metabolic kitchen. Weigh all food and beverage items using high-precision digital scales before serving. Use a crossover design where participants consume different controlled meals on separate days [14].

- Dietary Intake Estimation: On the day following the controlled feeding, administer the dietary assessment method being validated (e.g., an automated 24-hour recall like ASA24 or Intake24) [14].

- Data Analysis: Compare the estimated energy and nutrient intakes from the dietary tool to the true, weighed intakes. Calculate the mean difference (bias) and the limits of agreement. Use linear mixed models to assess differences among methods while accounting for repeated measures [14].
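
The bias and limits-of-agreement step in the data analysis can be sketched as follows. This is a minimal Bland-Altman style calculation using only the Python standard library; the function name and the sample values are illustrative, and the linear mixed models mentioned above would need a dedicated statistics package and are not shown.

```python
import statistics


def bias_and_limits_of_agreement(estimated, true_values, z=1.96):
    """Mean difference (bias) and 95% limits of agreement between paired measurements.

    estimated:   intakes reported by the dietary tool (e.g., kcal/day)
    true_values: weighed intakes from the controlled feeding day
    """
    if len(estimated) != len(true_values):
        raise ValueError("estimated and true_values must be paired")
    differences = [e - t for e, t in zip(estimated, true_values)]
    bias = statistics.mean(differences)
    sd = statistics.stdev(differences)  # sample standard deviation of the differences
    return bias, (bias - z * sd, bias + z * sd)


# Illustrative values only (not study data).
tool_kcal = [1900, 2100, 2250, 1800, 2400]
weighed_kcal = [2000, 2200, 2300, 2000, 2450]
bias, (lower, upper) = bias_and_limits_of_agreement(tool_kcal, weighed_kcal)
print(f"Bias: {bias:.0f} kcal, limits of agreement: {lower:.0f} to {upper:.0f} kcal")
```

A negative bias indicates that the tool under-estimates intake on average, while wide limits of agreement indicate poor agreement at the individual level even when the average bias is small.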

Protocol 2: Validation Against the Doubly Labeled Water (DLW) Method

This protocol assesses the validity of self-reported Energy Intake (EI) in free-living individuals [12].

- Baseline Assessment: Measure participant height, weight, and body composition. Collect a baseline urine sample.

- DLW Administration: Administer a dose of doubly labeled water (²H₂¹⁸O) based on the participant's body weight according to standardized equations [12].

- Urine Collection: Collect urine samples over a period of 7-14 days to account for day-to-day variation in physical activity. The specific collection schedule (e.g., daily, on days 1, 7, and 14) depends on the study protocol [12].

- Dietary Assessment: During the DLW measurement period, administer the self-report dietary method(s) under investigation (e.g., 7-day food record, multiple 24-hour recalls) [12].

- Laboratory & Data Analysis: Analyze urine samples for isotopic enrichment to calculate Total Energy Expenditure (TEE). In weight-stable individuals, TEE is equivalent to energy intake. Compare self-reported EI to TEE from DLW to determine the degree of misreporting (under- or over-reporting) [12].
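
The final comparison step can be expressed as a simple ratio. The sketch below, with a hypothetical function name and illustrative numbers, reports the EI:TEE ratio and the percentage misreporting for one weight-stable participant; no classification cut-off is applied, because the protocol above does not specify one.

```python
def misreporting(reported_ei_kcal, tee_dlw_kcal):
    """Compare self-reported energy intake with TEE from doubly labeled water.

    Returns the EI:TEE ratio and the percentage difference relative to TEE.
    Negative percentages indicate under-reporting, positive ones over-reporting.
    Valid only under the weight-stability assumption (EI equals TEE).
    """
    if tee_dlw_kcal <= 0:
        raise ValueError("TEE must be positive")
    ratio = reported_ei_kcal / tee_dlw_kcal
    percent = (reported_ei_kcal - tee_dlw_kcal) / tee_dlw_kcal * 100.0
    return {"ei_tee_ratio": ratio, "percent_misreporting": percent}


# Illustrative values only: 1,800 kcal/day reported against 2,400 kcal/day measured.
result = misreporting(reported_ei_kcal=1800.0, tee_dlw_kcal=2400.0)
print(result)
```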

Visualization of Method Selection & Error Pathways

Diagram 1: Dietary Assessment Method Selection

Diagram 2: Error Pathways in Traditional Self-Report

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Advanced Dietary Assessment Research

| Item | Function & Application | Key Considerations |

|---|---|---|

| Doubly Labeled Water (DLW) | Objective measurement of total energy expenditure in free-living individuals; serves as a reference method for validating self-reported energy intake [12]. | Extremely expensive and requires specialized laboratory equipment for isotopic analysis. Not feasible for large-scale studies. |

| Recovery Biomarkers | Objective biomarkers (e.g., urinary nitrogen for protein, urinary potassium/sodium) used to validate the intake of specific nutrients and quantify measurement error [8]. | Exist for only a limited number of nutrients (energy, protein, sodium, potassium). Collection of 24-hour urine samples can be burdensome. |

| Automated Self-Administered 24-Hour Recall (ASA24) | A web-based tool that automatically administers a 24-hour dietary recall, reducing interviewer burden and cost [8] [14]. | May not be feasible for all study populations (e.g., those with low literacy or limited internet access). |

| Image-Assisted Dietary Assessment | Uses photos of food pre- and post-consumption to aid in portion size estimation and food identification, reducing reliance on memory [14] [9]. | Requires standardization of photography protocols. Potential issues with image quality and participant compliance in capturing images. |

| Wearable Sensors (e.g., iEat) | Emerging technology using bio-impedance or other sensors to automatically detect food intake gestures and potentially identify food types, minimizing participant burden [6]. | Still in developmental stages. Accuracy for nutrient estimation and performance in real-world, unstructured environments needs further validation [6] [9]. |


The Rise of Preventative Medicine and Personalized Nutrition

Technical Support Center: Troubleshooting Guides and FAQs

This technical support center addresses common challenges in continuous dietary monitoring research, with a specific focus on optimizing energy efficiency in experimental protocols. The guidance is designed for researchers, scientists, and drug development professionals.

Frequently Asked Questions (FAQs)

Q1: What are the primary reasons for participant discontinuation in long-term Continuous Glucose Monitoring (CGM) studies, and how can we mitigate them? Participant discomfort and device burden are leading causes of CGM discontinuation [15]. Mitigation requires a multi-pronged approach:

- Device Selection: Choose newer-generation CGM sensors with smaller form factors and improved adhesives to minimize skin irritation and physical burden [15].

- Participant Training: Provide comprehensive education on proper sensor attachment and management. Setting realistic expectations about the initial feeling of wearing a device can improve long-term adherence.

- Data Feedback: Implement protocols to prevent information overload. Instead of providing raw, continuous data streams, use software that offers simplified, actionable insights and summary reports [15].

Q2: Our research app's high energy consumption is limiting data collection periods. What features are the biggest energy drains? Energy consumption in health apps is significantly influenced by specific functionalities. A comparative analysis of popular apps identified key contributors [16].

- High-Impact Features: Frequent push notifications, continuous GPS tracking, and high app complexity (e.g., real-time AI-driven recommendations) are statistically significant drivers of energy use [16].

- Optimization Strategy: To extend battery life, design study protocols that minimize the frequency of location pings and use efficient data syncing schedules (e.g., batch syncing instead of real-time). Where possible, use native app features over cross-platform frameworks, which can be less energy-efficient [16].

Q3: How can we validate the accuracy of digital dietary assessment tools in our trials? Validation is crucial for scientific rigor. The guiding principles for Personalized Nutrition (PN) implementation emphasize using validated diagnostic methods and measures [17].

- Reference Methods: Cross-validate new digital tools (e.g., image-based food recognition apps) against established reference methods, such as doubly labeled water for energy expenditure or weighed food records for intake [18].

- Portion Size Estimation: This is a major source of error. Utilize tools that incorporate reference images or 3D modeling to enhance the accuracy of portion size estimates provided by participants [18].

- Data Quality: Ensure the nutrient database powering your app or software is frequently updated and comprehensive to ensure data quality and relevance [17].

Troubleshooting Common Experimental Challenges

Challenge 1: High Variability in Glycemic Response Data

- Problem: Significant inter-individual variability in postprandial glucose responses makes it difficult to identify clear patterns or effects of interventions [19] [20].

- Solution: Do not rely on glucose data alone. Implement a multi-omics personalization approach. Develop algorithms that integrate CGM data with other individual-specific information such as gut microbiome composition (e.g., abundance of Akkermansia muciniphila), lipid profiles, and dietary habits to better predict and explain responses [19] [20]. The study by Zeevi et al. demonstrated that integrated models significantly outperform predictions based on single parameters.

Challenge 2: Participant Adherence to Personalized Dietary Protocols

- Problem: Participants struggle to adhere to prescribed personalized diets over the 18-week duration of a trial, confounding results [20].

- Solution:

  - User-Friendly Tools: Provide participants with an intuitive app that offers clear, easy-to-understand food scores and simple guidance, rather than complex nutritional data [17] [20].

  - Gamification and Feedback: Incorporate elements of behavioral science, such as personalized nudges, goal setting, and positive feedback, to maintain engagement [19]. Studies show that self-reported adherence is significantly higher in groups using a structured, app-based personalized program compared to those receiving standard static advice [20].

Challenge 3: Managing and Interpreting Large, Multimodal Datasets

- Problem: Integrating data from CGMs, wearable activity trackers, microbiome sequencing, and food logs creates massive, complex datasets that are challenging to analyze.

- Solution: Leverage advanced computational techniques. Artificial intelligence (AI) and machine learning (ML) models are essential for analyzing this vast amount of data to develop algorithms that can predict an individual's response to a specific food or diet [19] [21]. Cloud-based research platforms, like the one used in the Nutrition for Precision Health (NPH) study, are critical for managing and processing this information [21].

Experimental Protocols for Energy-Efficient Research

Protocol 1: Assessing Energy Consumption of Digital Monitoring Tools

Objective: To quantitatively evaluate and compare the energy efficiency of different mHealth apps and wearable devices used in dietary monitoring research.

Methodology:

- Baseline Measurement: Measure the device's energy consumption (in milliwatt-hours) over a set period while the app is installed but not in active use. This establishes a baseline for background processes [16].

- Feature-Specific Testing: Conduct controlled scenarios that trigger specific app features:

  - Logging: Measure energy used while a participant logs a standardized meal.

  - Syncing: Measure energy consumed during a data synchronization event.

  - GPS/Notifications: Activate location tracking and push notifications and measure the subsequent energy drain [16].

- Data Analysis: Use regression modeling to quantify the impact of each feature on total energy consumption. The model can be structured as:

Energy Consumption = β₀ + β₁(Notification Frequency) + β₂(GPS Use) + β₃(App Complexity) + ε [16].
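
As a rough sketch of how the coefficients in this model could be estimated, the example below fits an ordinary least squares regression by solving the normal equations in plain Python. The measurements are synthetic and noise-free, generated from made-up coefficients purely to show that the fit recovers them; the function name, variable coding (GPS as 0/1, complexity as an arbitrary score), and units are assumptions. A real analysis would use a statistics package to obtain standard errors and P values as well.

```python
def fit_ols(features, target):
    """Ordinary least squares: solve (X'X) b = X'y with an intercept column.

    features: list of rows, one per measurement run, e.g.
              (notification_frequency, gps_use, app_complexity)
    target:   measured energy consumption per run (e.g., mWh)
    Returns [b0, b1, ..., bk].
    """
    rows = [[1.0, *row] for row in features]
    k = len(rows[0])
    # Build the augmented normal-equation matrix [X'X | X'y].
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * y for r, y in zip(rows, target))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    beta = [0.0] * k
    for r in range(k - 1, -1, -1):
        tail = sum(aug[r][c] * beta[c] for c in range(r + 1, k))
        beta[r] = (aug[r][k] - tail) / aug[r][r]
    return beta


# Synthetic runs: (notifications per hour, GPS on/off, complexity score).
runs = [(0, 0, 1), (2, 1, 2), (4, 0, 3), (6, 1, 1), (1, 1, 3), (3, 0, 2)]
true_beta = (50.0, 4.0, 30.0, 10.0)  # made-up coefficients for the demonstration
energy_mwh = [true_beta[0] + true_beta[1] * n + true_beta[2] * g + true_beta[3] * c
              for n, g, c in runs]
print([round(b, 3) for b in fit_ols(runs, energy_mwh)])
```

Each fitted coefficient is read as the change in energy consumption per unit change in that feature with the others held constant, which is what makes the model useful for ranking features by energy cost.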

Energy Impact of Common mHealth App Features

| Feature | Impact on Energy Consumption | Optimization Strategy |

|---|---|---|

| Push Notifications | Statistically significant increase (P value = .01) [16] | Batch notifications; reduce frequency during low-engagement periods. |

| GPS Tracking | Statistically significant increase (P value = .05) [16] | Use geofencing for location triggers instead of continuous tracking. |

| App Complexity / Real-time AI | Statistically significant increase (P value = .03); can consume up to 30% more energy [16] | Offload complex processing to cloud servers; optimize algorithms. |

| Background Data Syncing | Can account for up to 40% of total energy use [16] | Schedule syncing for periods of device charging or high battery. |

Protocol 2: A Multi-Parameter Personalized Nutrition Trial

Objective: To evaluate the efficacy of a multi-level personalized dietary program (PDP) on cardiometabolic health outcomes compared to standard dietary advice.

Methodology (Adapted from the ZOE METHOD Study) [20]:

- Participant Characterization: At baseline, collect:

  - Genotype: Focus on SNPs relevant to metabolism (e.g., FTO, TCF7L2) [19].

  - Phenotype: Measure postprandial glucose and triglyceride responses to standardized test meals [20].

  - Microbiome: Analyze gut microbiota composition via 16S rRNA sequencing [19] [20].

  - Clinical Biomarkers: HbA1c, LDL-C, TG, body weight, waist circumference.

- Intervention: Randomize participants into two groups:

  - PDP Group: Receive dietary advice via an app-based program that integrates all baseline data to generate personalized food scores.

  - Control Group: Receive standard, general dietary advice (e.g., USDA Guidelines) [20].

- Duration: 18-week intervention period.

- Endpoint Analysis: Re-measure all clinical biomarkers collected at baseline. Perform statistical analysis (e.g., an intention-to-treat analysis) to compare changes between groups.

Key Reagent Solutions for Personalized Nutrition Research

| Research Reagent | Function in Experiment |

|---|---|

| Continuous Glucose Monitor (CGM) | Measures interstitial glucose levels continuously to assess glycemic variability and postprandial responses [19] [15]. |

| Standardized Test Meals | Used in challenge tests to elicit a standardized metabolic response for comparing inter-individual variability [17] [20]. |

| DNA Microarray / SNP Genotyping Kit | Identifies genetic variations (e.g., in FTO, PPARG) that influence an individual's response to nutrients like fats and carbohydrates [19]. |

| 16S rRNA Sequencing Reagents | Profiles the gut microbiome composition to identify bacterial species (e.g., A. muciniphila) associated with dietary fiber metabolism and insulin sensitivity [19]. |

| Multiplex ELISA Kits | Allows simultaneous measurement of multiple cardiometabolic biomarkers from a single serum/plasma sample (e.g., TG, LDL-C, insulin) [20]. |

Visualized Workflows and Pathways

Diagram: Multi-Omics Data Integration in Personalized Nutrition

Diagram: Energy Consumption in mHealth App Ecosystem

Key Biomarkers and Metabolic Parameters for Continuous Tracking

Fundamental Biomarker Classification for Nutritional Research

Categories of Nutritional Biomarkers

In nutritional studies, biomarkers are systematically classified into three primary categories based on their function and the information they provide. This classification is critical for designing experiments and interpreting data related to energy metabolism and dietary exposure [22].

Biomarkers of Exposure serve as objective indicators of nutrient intake, overcoming limitations inherent in self-reported dietary assessments such as recall bias and portion size estimation errors. These include direct measurements of food-derived compounds in biological samples [23]. Example biomarkers in this category include alkylresorcinols (whole-grain intake), proline betaine (citrus exposure), and daidzein (soy intake) [23].

Biomarkers of Status measure the concentration of nutrients in biological fluids or tissues, or the urinary excretion of nutrients and their metabolites. These biomarkers aim to reflect body nutrient stores or the status of tissues most sensitive to depletion. Examples include serum ferritin for iron status and plasma zinc for zinc status [22].

Biomarkers of Function assess the functional consequences of nutrient deficiency or excess, providing greater biological significance than static concentration measurements. These are subdivided into:

- Functional Biochemical Biomarkers: Measure activity of nutrient-dependent enzymes or presence of abnormal metabolites (e.g., erythrocyte transketolase activity for thiamine status) [22].

- Functional Physiological/Behavioral Biomarkers: Assess outcomes related to health status such as immune function, growth, cognition, or response to vaccination [22].

Table 1.1: Classification of Nutritional Biomarkers with Examples

| Biomarker Category | Primary Function | Representative Examples | Sample Type |

|---|---|---|---|

| Exposure | Objective assessment of food/nutrient intake | Alkylresorcinols, Proline betaine, Daidzein | Plasma, Urine |

| Status | Measurement of body nutrient reserves | Nitrogen, Ferritin, Plasma zinc | Urine, Serum, Plasma |

| Function | Assessment of functional consequences | Enzyme activity assays, Immune response, Cognitive tests | Blood, Urine, Functional tests |

Context of Use Framework for Biomarker Validation

The Context of Use (COU) is defined as "a concise description of the biomarker's specified use" and includes both the biomarker category and its intended application in research or development. Establishing a clear COU is essential because it determines the statistical analysis plan, study populations, and acceptable measurement variance [24].

The BEST (Biomarkers, EndpointS, and other Tools) resource categorizes biomarkers as:

- Diagnostic: Identify presence or absence of a condition

- Monitoring: Track disease status or response to intervention

- Pharmacodynamic/Response: Show biological response to therapeutic intervention

- Predictive: Identify likelihood of response to specific treatment

- Prognostic: Identify likelihood of clinical event

- Safety: Indicate likelihood of adverse event

- Susceptibility/Risk: Identify potential for developing condition [24]

For continuous dietary monitoring research, the COU framework ensures biomarkers are validated specifically for their intended application in tracking metabolic parameters, which is fundamental to generating reliable, reproducible data.

Key Metabolic Parameters for Continuous Tracking

Core Metabolic Health Markers

Metabolic health is quantitatively assessed through five primary clinical markers that reflect the body's energy processing efficiency. These markers provide crucial insights into metabolic syndrome risk and overall physiological function [25] [26].

Blood Glucose Levels represent circulating sugar primarily from dietary intake. Maintaining stable levels is critical for metabolic homeostasis. Optimal fasting levels are generally below 100 mg/dL, with variability being as significant as absolute values. Continuous Glucose Monitors (CGMs) enable real-time tracking of glucose fluctuations in response to dietary interventions, physical activity, and sleep patterns [25].

Triglycerides are a form of dietary fat stored in fat tissue and circulating in blood. Elevated levels (≥150 mg/dL) correlate with cardiovascular disease risk and metabolic dysfunction. Factors influencing triglyceride levels include high sugar intake, alcohol consumption, and physical inactivity [25] [26].

HDL Cholesterol is often called "good cholesterol" because it carries excess cholesterol away from the arteries and back to the liver (reverse cholesterol transport). Optimal levels are ≥60 mg/dL, and low values (<40 mg/dL) increase cardiovascular risk. Unlike the other markers, higher HDL is generally desirable. Levels are influenced by smoking, sedentary behavior, and diet composition [25] [26].

Blood Pressure measures arterial force during heart contraction (systolic) and relaxation (diastolic). Healthy values are at or below 120/80 mmHg. Chronic elevation increases risks for cardiovascular disease, stroke, and vascular dementia. Influential factors include sodium intake, stress management, and physical activity levels [25] [26].

Waist Circumference quantifies abdominal fat deposition, specifically indicating visceral fat surrounding organs. Healthy measurements are <40 inches (men) and <35 inches (non-pregnant women). This marker independently predicts metabolic disease risk beyond overall body weight [25] [26].

Table 2.1: Core Metabolic Health Markers and Their Clinical Ranges

| Metabolic Marker | Optimal Range | Risk Threshold | Primary Significance | Influencing Factors |

|---|---|---|---|---|

| Blood Glucose (Fasting) | <100 mg/dL | ≥100 mg/dL | Energy processing, Diabetes risk | Diet, Exercise, Sleep, Stress |

| Triglycerides | <150 mg/dL | ≥150 mg/dL | Cardiovascular risk | Sugar intake, Alcohol, Activity |

| HDL Cholesterol | ≥60 mg/dL | <40 mg/dL | Reverse cholesterol transport | Smoking, Exercise, Diet |

| Blood Pressure | ≤120/80 mmHg | >130/80 mmHg | Cardiovascular strain | Sodium, Stress, Activity |

| Waist Circumference | <40" (M), <35" (F) | ≥40" (M), ≥35" (F) | Visceral fat accumulation | Diet, Exercise, Genetics |
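As a rough illustration of how the risk thresholds in Table 2.1 could be applied to a participant record, the following Python sketch flags each marker that meets its risk threshold. The function name and the example values are hypothetical. Reading ">130/80 mmHg" as "systolic above 130 or diastolic above 80" is an assumption made here.

```python
def flag_risk_markers(glucose_mg_dl, tg_mg_dl, hdl_mg_dl,
                      systolic, diastolic, waist_in, sex):
    """Return the names of markers at or beyond the Table 2.1 risk thresholds.

    sex is "M" or "F"; waist_in is waist circumference in inches.
    """
    waist_limit = 40 if sex == "M" else 35
    checks = {
        "fasting_glucose": glucose_mg_dl >= 100,
        "triglycerides": tg_mg_dl >= 150,
        "hdl_cholesterol": hdl_mg_dl < 40,
        # Assumption: either component above its limit counts as elevated.
        "blood_pressure": systolic > 130 or diastolic > 80,
        "waist_circumference": waist_in >= waist_limit,
    }
    return [name for name, at_risk in checks.items() if at_risk]


# Hypothetical participant record.
flags = flag_risk_markers(
    glucose_mg_dl=104, tg_mg_dl=140, hdl_mg_dl=38,
    systolic=128, diastolic=84, waist_in=36, sex="F",
)
print(flags)
```

HDL values between 40 and 60 mg/dL fall between the table's risk threshold and its optimal range, so this sketch leaves them unflagged.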

Advanced Nutritional Biomarkers for Continuous Monitoring

Beyond the core metabolic panel, numerous specialized biomarkers provide targeted information about specific dietary exposures and nutritional status. These biomarkers are particularly valuable for validating dietary interventions and understanding nutrient bioavailability [23].

Food Intake Biomarkers include:

- Alkylresorcinols: Metabolites indicating whole-grain wheat and rye consumption, measurable in plasma [23]

- Proline Betaine: Marker for citrus fruit intake, detectable in urine following consumption [23]

- Daidzein and Genistein: Phytoestrogens indicating soy product consumption, measurable in urine and plasma [23]

- Carotenoids: Plant pigment compounds reflecting fruit and vegetable intake, quantifiable in plasma/serum [23]

Nutrient Status Biomarkers include:

- Homocysteine: Functional biomarker of one-carbon metabolism and folate status [23]

- Erythrocyte Fatty Acid Composition: Status marker for essential fatty acids including EPA and DHA [23]

- Creatinine and Nitrogen: Urinary markers of protein intake and muscle metabolism [23]

These biomarkers enable researchers to objectively verify dietary compliance in intervention studies and correlate specific food exposures with metabolic outcomes, providing advantages over traditional food frequency questionnaires and dietary recalls.

Experimental Protocols for Biomarker Validation

Analytical Validation Methodology

Diagram 1: Analytical Validation Workflow for Biomarker Assays

Analytical validation establishes that a biomarker detection method meets acceptable performance standards for sensitivity, specificity, accuracy, precision, and other relevant characteristics using specified technical protocols [24]. This process validates the technical performance of the assay itself, independent of its clinical usefulness.

Key Steps in Analytical Validation:

- Define Performance Specifications: Establish target values for sensitivity, specificity, accuracy, and precision based on the biomarker's Context of Use [24]

- Protocol Standardization: Develop detailed specimen collection, handling, storage, and analysis procedures to minimize technical variability [22]

- Inter- and Intra-Assay Validation: Determine coefficient of variation across multiple runs and within single assays

- Linearity and Recovery: Establish assay performance across expected physiological range

- Stability Studies: Evaluate biomarker stability under various storage conditions and timeframes
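The inter- and intra-assay step above can be sketched in Python. This minimal example assumes one common convention: intra-assay CV is the average of the per-run CVs of replicate measurements, and inter-assay CV is the CV of the run means. The function names and the control-sample values are hypothetical.

```python
from statistics import mean, stdev


def cv_percent(values):
    """Coefficient of variation as a percentage (sample SD / mean)."""
    return 100 * stdev(values) / mean(values)


def intra_assay_cv(runs):
    """Average CV of replicates within each run."""
    return mean(cv_percent(run) for run in runs)


def inter_assay_cv(runs):
    """CV of the run means across runs."""
    return cv_percent([mean(run) for run in runs])


# Hypothetical control sample measured in triplicate on three separate runs.
runs = [
    [98, 102, 100],
    [105, 103, 107],
    [95, 97, 96],
]

intra = intra_assay_cv(runs)
inter = inter_assay_cv(runs)
print(f"Intra-assay CV: {intra:.2f}%")
print(f"Inter-assay CV: {inter:.2f}%")
```

The resulting values would then be compared against the performance specifications defined for the biomarker's Context of Use.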

Clinical Validation Methodology

Clinical validation establishes that a biomarker "acceptably identifies, measures, or predicts the concept of interest" for its specified Context of Use [24]. This process evaluates the biomarker's performance and usefulness as a decision-making tool.

Clinical Validation Protocol Components:

- Study Population Selection: Recruit participants representing the intended use population, considering age, sex, health status, and relevant comorbidities [24]

- Reference Standard Comparison: Compare biomarker performance against accepted reference standards (clinical outcome assessments, established diagnostic tools, or postmortem pathology) [24]

- Longitudinal Assessment: For monitoring biomarkers, implement repeated measures over time to establish relationship to disease progression or intervention response [24]

- Confounding Factor Documentation: Record medications, supplements, hormonal status, physical activity, health status, and other factors that may influence biomarker levels [22]

- Statistical Analysis Plan: Develop analysis strategy aligned with Context of Use, including determination of effect sizes, confidence intervals, and decision thresholds [24]
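For a binary decision threshold, the reference standard comparison can be summarized with sensitivity and specificity. The sketch below is a minimal Python illustration; the function name and the classification data are hypothetical, and a full analysis plan would add confidence intervals and pre-specified thresholds.

```python
def diagnostic_accuracy(biomarker_positive, reference_positive):
    """Sensitivity and specificity of a biomarker against a reference standard.

    Both arguments are equal-length sequences of booleans, one per participant.
    """
    pairs = list(zip(biomarker_positive, reference_positive))
    tp = sum(b and r for b, r in pairs)
    fp = sum(b and not r for b, r in pairs)
    fn = sum(not b and r for b, r in pairs)
    tn = sum(not b and not r for b, r in pairs)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical classifications for eight participants.
biomarker = [True, True, False, False, True, False, True, False]
reference = [True, False, False, False, True, True, True, False]

sensitivity, specificity = diagnostic_accuracy(biomarker, reference)
print(f"Sensitivity: {sensitivity:.2f}, specificity: {specificity:.2f}")
```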

Troubleshooting Guides and FAQs

Common Experimental Challenges and Solutions

Issue: High Intra-Individual Variability in Biomarker Measurements

- Potential Causes: Diurnal variation, recent dietary intake, inconsistent fasting status, biological rhythms [22] [27]

- Solutions: Standardize collection times across participants, implement controlled fasting protocols, collect multiple samples over time, account for menstrual cycle phases in female participants [22]

Issue: Inconsistency Between Self-Reported Intake and Biomarker Data

- Potential Causes: Underreporting in dietary assessments, limitations in food composition tables, inter-individual differences in nutrient bioavailability [23]

- Solutions: Use multiple dietary assessment methods, include biomarker measurements to validate intake data, account for food preparation and processing methods that affect nutrient bioavailability [23]

Issue: Influence of Inflammation on Nutritional Biomarkers

- Potential Causes: Acute infection, inflammatory disorders, obesity, recent physical trauma triggering acute-phase response [22]

- Solutions: Measure C-reactive protein (CRP) and alpha-1-acid glycoprotein (AGP) to detect inflammation, apply statistical correction methods (e.g., BRINDA), exclude participants with active inflammatory conditions [22]

Issue: Discrepancy Between Different Sample Types (e.g., Plasma vs. Urine)

- Potential Causes: Different elimination kinetics, varying metabolic pathways, compartmental distribution differences

- Solutions: Establish sample-type specific reference ranges, understand pharmacokinetic profiles of target biomarkers, standardize sample type across study [28]

Frequently Asked Questions

Q: How often should biomarker measurements be repeated in continuous monitoring studies? A: Measurement frequency depends on the biomarker's biological half-life and physiological variability. Short-lived biomarkers (e.g., glucose) may require continuous or daily monitoring, while slowly changing biomarkers (e.g., HbA1c, which reflects average glucose over roughly the preceding two to three months) need far less frequent assessment, typically monthly or less often. Consider the research question, biomarker kinetics, and practical constraints when determining frequency [22] [27].

Q: What is the difference between 'normal' ranges and 'optimal' ranges for biomarkers? A: 'Normal' ranges are statistical constructs derived from population data, typically representing the 95% central interval of a reference population. 'Optimal' ranges represent values associated with the lowest disease risk and best health outcomes, which often fall within narrower windows than general population norms [27].

Q: When should liquid biopsies versus tissue biopsies be used for biomarker assessment? A: Liquid biopsies are less invasive and provide systemic information but may have lower sensitivity for detecting certain biomarkers, particularly with low disease burden or specific alteration types. Tissue biopsies remain the gold standard for initial diagnosis and can detect histological changes, but carry higher procedural risks. The choice depends on the specific biomarkers, clinical context, and research objectives [28].

Q: How can researchers address the energy efficiency requirements of continuous monitoring systems? A: Implement IoT-based ecosystems with ultra-low consumption sensors, optimize data transmission protocols to minimize power use, utilize edge processing to reduce continuous data streaming, and select monitoring equipment with high energy efficiency ratings [29].

Q: What are the key considerations for selecting biomarkers for nutritional intervention studies? A: Choose biomarkers with appropriate responsiveness to the intervention timeframe, established analytical validity, relevance to the biological pathways being modified, practical measurement requirements, and well-characterized confounding factors. Combining exposure, status, and functional biomarkers provides comprehensive assessment [23] [22].

Research Reagent Solutions and Essential Materials

Table 5.1: Essential Research Materials for Biomarker Analysis

| Reagent/Material | Function/Application | Technical Considerations |

|---|---|---|

| Next-Generation Sequencing (NGS) Kits | Comprehensive genomic biomarker testing for multiple targets simultaneously | Should include DNA and RNA sequencing capabilities; essential for detecting fusions/rearrangements [28] |

| Liquid Biopsy Collection Systems | Non-invasive sampling for circulating biomarkers | Balance between sensitivity and specificity; optimal for point mutations but may miss complex alterations [28] |

| Continuous Glucose Monitoring Systems | Real-time tracking of glucose dynamics | Provide continuous data on glucose variability and responses to interventions; superior to single-point measurements [25] |

| Standard Reference Materials | Quality control and assay calibration | Certified reference materials with known biomarker concentrations essential for analytical validation [24] |

| Stabilization Buffers/Preservatives | Maintain biomarker integrity during storage/transport | Specific to biomarker type (e.g., protease inhibitors for protein biomarkers, RNAlater for RNA) [22] |

| Immunoassay Reagents | Quantification of protein biomarkers | Include appropriate antibodies with demonstrated specificity; consider cross-reactivity potential [27] |

| Mass Spectrometry Standards | Absolute quantification of metabolites | Isotope-labeled internal standards for precise measurement of small molecules and metabolites [23] |

Energy-Efficient Monitoring Technologies

Implementing continuous biomarker monitoring requires careful consideration of energy requirements, particularly for long-term studies and remote monitoring applications [29].

IoT-Based Monitoring Ecosystems enable real-time data collection while optimizing power consumption through:

- Ultra-low consumption sensors for temperature, humidity, and environmental monitoring [29]

- Configurable data transmission intervals to balance temporal resolution with battery life [29]

- Edge processing capabilities to reduce continuous data streaming requirements [29]

System Architecture Considerations for energy-efficient monitoring:

- Sensor Selection: Choose sensors with appropriate power requirements for the monitoring context

- Data Transmission: Utilize low-power communication protocols (e.g., LoRaWAN, Bluetooth LE)

- Power Sourcing: Evaluate battery, solar, or wired power options based on deployment location and duration

- Data Processing: Implement algorithms to minimize redundant data collection and transmission
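Two of these considerations can be made concrete with a short Python sketch: a send-on-delta filter, which is one simple form of edge processing that transmits a reading only when it differs enough from the last transmitted value, and a duty-cycle estimate of battery life as a function of transmission interval. All function names, current draws, and capacities are hypothetical assumptions for illustration and do not describe any particular device.

```python
def send_on_delta(readings, threshold):
    """Return (index, value) pairs that would be transmitted.

    A reading is sent if it is the first one or differs from the last
    transmitted value by at least `threshold`.
    """
    sent = []
    last = None
    for i, value in enumerate(readings):
        if last is None or abs(value - last) >= threshold:
            sent.append((i, value))
            last = value
    return sent


def battery_life_days(capacity_mah, sleep_ma, tx_ma, tx_seconds, interval_seconds):
    """Estimate battery life from a simple two-state duty-cycle model."""
    duty = tx_seconds / interval_seconds
    average_ma = sleep_ma * (1 - duty) + tx_ma * duty
    return capacity_mah / average_ma / 24


# Hypothetical sensor stream (e.g., glucose in mg/dL) and a 5-unit threshold.
stream = [100, 101, 102, 110, 111, 95]
transmitted = send_on_delta(stream, threshold=5)
print(f"Sent {len(transmitted)} of {len(stream)} readings: {transmitted}")

# Hypothetical coin-cell device: compare 1-minute and 5-minute intervals.
for interval in (60, 300):
    days = battery_life_days(220, 0.005, 15, 0.5, interval)
    print(f"Interval {interval} s: about {days:.0f} days")
```

The trade-off is the one noted above: longer intervals and larger thresholds extend battery life at the cost of temporal resolution.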

Diagram 2: Energy-Efficient Data Flow for Continuous Monitoring Systems

Linking Dietary Patterns to Energy Metabolism and Chronic Disease

## Foundational Knowledge: Dietary Patterns and Health

This section provides evidence-based summaries of dietary patterns relevant to metabolic health and chronic disease prevention.

What does the current evidence say about the effectiveness of major dietary patterns for Metabolic Syndrome (MetS)?

A 2025 network meta-analysis of 26 randomized controlled trials provides a direct comparison of six dietary patterns for managing MetS. The key findings on their effectiveness for specific outcomes are summarized in the table below [30].

Table 1: Ranking of Dietary Patterns for Specific Metabolic Syndrome Components

| Metabolic Component | Most Effective Diet | Key Comparative Finding |

|---|---|---|

| Reducing Waist Circumference | Vegan Diet | Best for reducing waist circumference and increasing HDL-C levels [30]. |

| Lowering Blood Pressure | Ketogenic Diet | Highly effective in reducing both systolic and diastolic blood pressure [30]. |

| Lowering Triglycerides (TG) | Ketogenic Diet | Highly effective for triglyceride reduction [30]. |

| Regulating Fasting Blood Glucose | Mediterranean Diet | Highly effective in controlling fasting blood glucose [30]. |

| Increasing HDL-C | Vegan Diet | Ranked as the best choice for increasing "good" cholesterol [30]. |

What are the core features of the Mediterranean, DASH, and Plant-Predominant diets?

Table 2: Appraisal of Common Dietary Patterns for Chronic Disease

| Dietary Pattern | Core Features | Evidence Summary for Chronic Disease | Practical Considerations |

|---|---|---|---|

| Mediterranean Diet | High in fruits, vegetables, whole grains, olive oil, nuts, legumes; moderate fish/poultry; low red meat [31] [32]. | Strong evidence for cardiovascular risk reduction, anti-inflammatory and antioxidant effects [31] [32]. | Flexible and adaptable to cultural preferences; can use frozen produce for budget [31]. |

| DASH Diet | Emphasizes fruits, vegetables, whole grains, lean protein; rich in potassium (4,700 mg/day); limits sodium (1,500 mg/day) [31] [30]. | Significant improvements in blood pressure, metabolic syndrome, and lipid profiles [31] [30]. | Contraindicated for patients with kidney disease due to high potassium; budget-friendly with staples like beans/oatmeal [31]. |

| Plant-Predominant Diets | Spectrum from vegetarian (no meat) to vegan (no animal products) [31]. | Reduced cholesterol, blood pressure, and risk of certain cancers [31]. | Risk of iron deficiency; focus on whole foods (beans, lentils, greens) over processed alternatives [31]. |

## The Scientist's Toolkit: Dietary Monitoring Technologies

This section details the methodologies and tools for objective dietary intake monitoring, a cornerstone of energy efficiency in nutrition research.

What are the key technology-assisted methods for dietary assessment, and how accurate are they?

A 2022 randomized crossover feeding study compared the accuracy of four technology-assisted 24-hour dietary recall (24HR) methods against objectively measured true intake [14].

Table 3: Accuracy of Technology-Assisted Dietary Assessment Methods

| Assessment Method | Description | Mean Difference in Energy Estimation vs. True Intake | Key Findings |

|---|---|---|---|

| ASA24 | Automated Self-Administered Dietary Assessment Tool [14]. | +5.4% [14] | Estimated average intake with reasonable validity [14]. |

| Intake24 | An online 24-hour dietary recall system [14]. | +1.7% [14] | Most accurate for estimating the distribution of energy and protein intake in the population [14]. |

| mFR-TA | Mobile Food Record analyzed by a trained analyst [14]. | +1.3% [14] | Estimated average intake with reasonable validity [14]. |

| IA-24HR | Interviewer-administered recall using images from a mobile Food Record app [14]. | +15.0% [14] | Overestimated energy intake significantly [14]. |

What are the emerging sensor-based methods for Automatic Dietary Monitoring (ADM)?

Beyond traditional recalls, research is focused on automated, sensor-based systems to reduce user burden and improve accuracy [33] [6].

- Vision-Based Methods: Use cameras and computer vision for food recognition, portion size estimation, and intake action detection. Challenges include occlusion, privacy concerns, and variable computational efficiency [33].

- Wearable Bio-Impedance Sensors: Systems like iEat use wrist-worn electrodes to measure impedance changes caused by dynamic circuits formed between the body, utensils, and food during eating. This method can recognize intake activities (e.g., cutting, drinking) and classify food types [6].

- Other Wearables & Smart Objects: These include smart utensils with inertial measurement units (IMUs), neckbands with microphones to detect chewing/swallowing, and smart trays with pressure sensors [33] [6].

Diagram 1: Dietary assessment workflow.

## Experimental Protocols & Methodologies

This section provides detailed protocols for key experiments and methodologies cited in this field.

Detailed Protocol: Controlled Feeding Study for Validating Dietary Assessment Tools

This protocol is based on the design used to generate the data in Table 3 [14].

- Objective: To compare the accuracy of energy and nutrient intake estimation of multiple technology-assisted dietary assessment methods relative to true intake in a controlled setting.

- Design: Randomized crossover feeding study.

- Participants: Recruit ~150 participants, representing a mix of genders, with mean age around 32 and mean BMI around 26 kg/m² [14].

- Feeding Day:

  - Randomize participants to one of several separate feeding days.

  - Provide participants with standardized breakfast, lunch, and dinner.

  - Weigh all foods and beverages provided and any leftovers unobtrusively to establish the "true intake" with high precision [14].

- Dietary Recall:

  - On the day following the feeding day, participants are randomized to complete a 24-hour dietary recall using one of the technology-assisted methods under investigation (e.g., ASA24, Intake24, mFR-TA, IA-24HR) [14].

- Data Analysis:

  - Calculate the difference between the true intake (from weighed food) and the estimated intake (from the recall tool) for energy and multiple nutrients.

  - Use linear mixed models to assess the statistical significance of the differences among methods.

  - Report mean differences, confidence intervals, and variances [14].
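The first and last data analysis steps can be sketched in Python as follows. The example computes the percentage difference between estimated and true energy intake per participant (positive values indicate overestimation, matching the sign convention of Table 3) and a normal-approximation 95% confidence interval for the mean. It does not implement the linear mixed models named in the protocol, and the intake values and function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def percent_differences(true_intake, estimated_intake):
    """Percentage difference of estimated vs. true intake per participant."""
    return [100 * (e - t) / t for t, e in zip(true_intake, estimated_intake)]


def mean_with_ci(values, confidence=0.95):
    """Mean and normal-approximation confidence interval."""
    m = mean(values)
    se = stdev(values) / sqrt(len(values))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return m, m - z * se, m + z * se


# Hypothetical energy intakes (kJ) for four participants using one recall tool.
true_kj = [8000, 9000, 10000, 8500]
estimated_kj = [8400, 9000, 10500, 8075]

differences = percent_differences(true_kj, estimated_kj)
mean_diff, ci_low, ci_high = mean_with_ci(differences)
print(f"Mean difference: {mean_diff:+.2f}% (95% CI {ci_low:.2f} to {ci_high:.2f})")
```

With samples this small a t-based interval would be more appropriate; the normal approximation is used only to keep the sketch within the standard library.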

Detailed Protocol: Wearable Bio-Impedance for Dietary Activity Monitoring (iEat System)

This protocol outlines the methodology for using wearable impedance sensors, as described in the iEat study [6].

- Objective: To automatically detect food intake activities and classify food types using a wrist-worn bio-impedance sensor.

- Hardware Setup:

  - Use a wearable device with a single bio-impedance sensing channel.

  - Attach one electrode to each wrist of the participant. A two-electrode configuration is sufficient for detecting dynamic impedance changes [6].

- Data Collection:

  - Conduct experiments in a realistic, everyday table-dining environment.

  - Participants consume multiple meals, performing activities like cutting, drinking, and eating with utensils or hands.

  - The impedance sensor continuously measures the electrical impedance across the body. Dynamic circuits formed between hands, utensils, food, and the mouth cause unique temporal patterns in the impedance signal [6].

- Signal Processing and Modeling:

  - The system relies on the variation in impedance signals, not absolute values.

  - An abstracted human-food interaction circuit model is used to interpret the signal changes.

  - A lightweight, user-independent neural network model is trained to classify the signal patterns into specific activities (cutting, drinking, etc.) and food types [6].

- Performance Evaluation:

  - Evaluate the system using metrics like macro F1 score for activity recognition and food classification on a dataset of multiple meals from numerous volunteers [6].
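The macro F1 score named in the evaluation step is the unweighted mean of the per-class F1 scores, so rare activities count as much as common ones. The Python sketch below computes it from true and predicted labels. The activity labels and predictions are hypothetical and are not results from the iEat study.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denominator = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


# Hypothetical activity labels for six signal windows.
actual = ["cut", "cut", "drink", "eat", "eat", "drink"]
predicted = ["cut", "eat", "drink", "eat", "eat", "cut"]

score = macro_f1(actual, predicted)
print(f"Macro F1: {score:.3f}")
```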

## Research Reagent Solutions

Table 4: Essential Tools for Dietary Monitoring Research

| Item / Solution | Function in Research | Example / Note |

|---|---|---|

| Automated 24HR Systems | Enable scalable, self-administered dietary data collection for population surveillance. | ASA24, Intake24 [14]. |

| Wrist-Worn Bio-Impedance Sensor | Detects dietary activities and food types via dynamic electrical circuit changes caused by body-food-utensil interactions. | The iEat wearable system [6]. |

| Neck-Worn Acoustic Sensor | Monitors ingestion sounds (chewing, swallowing) for intake detection and food recognition. | AutoDietary system [6]. |

| Smart Utensils & Objects | Directly monitor interaction with food via integrated sensors (e.g., IMU, pressure). | Smart forks (IMU), smart dining trays (pressure sensors) [33] [6]. |

| Computer Vision Algorithms | Automate food recognition, segmentation, and volume estimation from images or video. | Used in Vision-Based Dietary Assessment (VBDA); requires RGB or depth-sensing cameras [33]. |

| Professional Diet Analysis Software | Analyze nutrient composition of recipes, menus, and diets for clinical or research purposes. | Nutritionist Pro software [34]. |

Diagram 2: Bio-impedance sensing principle.

## Frequently Asked Questions (FAQs)

Q1: In our research on Metabolic Syndrome, which dietary pattern should we recommend as an intervention for the best overall outcomes?

Based on a recent network meta-analysis, the Vegan, Ketogenic, and Mediterranean diets show more pronounced effects for ameliorating MetS, but the choice depends on the primary outcome [30]. For overall profile improvement, a Mediterranean diet is a strong candidate due to its proven benefits in regulating blood glucose, improving lipid profiles, and reducing cardiovascular risk through anti-inflammatory and antioxidant mechanisms [30] [32]. The best diet is ultimately the one participants can adhere to long-term, considering cultural, personal, and socioeconomic factors [31].

Q2: Our validation study found significant over-reporting of energy intake with image-assisted interviewer recalls. What might be the cause, and what are more accurate alternatives?

Your finding is consistent with controlled validation studies. Research shows the Image-Assisted Interviewer-Administered 24HR (IA-24HR) method can overestimate energy intake by approximately 15% compared to true, weighed intake [14]. This could be due to participant or interviewer bias in describing portion sizes even with image aids. For more accurate population-level averages, consider Intake24, ASA24, or the mobile Food Record analyzed by a trained analyst (mFR-TA), which showed mean differences of only +1.7%, +5.4%, and +1.3% respectively [14]. If estimating the distribution of intake in your population is important, Intake24 was found to be the most accurate for that specific task [14].

Q3: We are developing a wearable for dietary monitoring. What is an emerging sensing modality that moves beyond traditional inertial measurement units (IMUs)?

An emerging and promising modality is bio-impedance sensing. Systems like iEat use electrodes on each wrist to measure impedance changes created by dynamic circuits formed between the body, metal utensils, and conductive food items during eating activities [6]. This method can recognize specific activities (e.g., cutting, drinking) and classify food types based on unique temporal signal patterns, offering a novel approach that is different from gesture-based IMU recognition [6].

Q4: What are the major practical challenges and sources of bias when applying vision-based dietary monitoring in free-living conditions?

Key challenges include [33]:

- Occlusion: Food items often obscure each other, making identification and volume estimation difficult.

- Privacy: Continuous video or image capture raises significant user privacy concerns.

- Variable Image Quality: Performance is highly dependent on lighting conditions and camera stability.

- Computational Efficiency: Running complex food recognition algorithms in real-time on mobile devices is challenging.

- Practicality: Requiring users to capture images of every meal can be burdensome and lead to non-adherence.

Digital and Biomarker Tools for Precision Dietary Data Collection

Commercial Continuous Glucose Monitors (CGMs) for Metabolic Research

FAQs: Utilizing CGMs in Research Settings

Q1: How can CGMs be applied in research involving healthy adult populations? CGM systems are valuable tools for investigating glucose dynamics in response to various stimuli in healthy adults. Research applications include studying the metabolic impact of nutritional interventions, understanding the effects of physical activity on glucose regulation, examining the relationship between psychological stress and glucose levels, and establishing normative glucose profiles for different demographics. Key metrics often analyzed include Time in Range (TIR), mean 24-hour glucose, and glycemic variability indices [35].

Q2: What are the primary technical challenges when using CGMs in experiments? Common technical issues researchers may encounter include:

- Signal Loss: The receiver or smart device is too far from the transmitter, or physical barriers (walls, metal) disrupt the Bluetooth connection [36] [37].

- Sensor Failure: Sensors can fail due to applicator malfunction, accidental knocks, or expiration of the sensor session (typically 10-14 days for most models) [36] [37].

- Skin Irritation: Adhesives can cause skin reactions, potentially affecting participant compliance [36].

- Data Gaps: Temporary "Brief Sensor Issue" or "No Readings" alerts can create interruptions in the continuous data stream [37].

Q3: What methodologies ensure data accuracy in CGM-based studies? To ensure data quality, researchers should:

- Strictly follow manufacturer instructions for sensor application [36].

- Clean the application site with an alcohol wipe to ensure proper adhesion [36].

- Be aware of the inherent time delay (typically a few minutes) between blood glucose and interstitial fluid glucose readings, especially during periods of rapidly changing glucose levels [35].

- Use blood glucose meter (BGM) measurements to verify CGM readings when values are questionable or do not match clinical symptoms [37].

Q4: How is CGM data interpreted for participants without diabetes? Studies establishing normative values show that healthy, nondiabetic, normal-weight individuals typically spend about 96% of their time in a glucose range of 70 to 140 mg/dL. The mean 24-hour glucose for these populations is approximately 99 ± 7 mg/dL (5.5 ± 0.4 mmol/L) [35]. Deviations from these baselines, such as increased time above 140 mg/dL, can be a focus of research [35].
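The metrics discussed in these FAQs can be derived from a series of CGM readings with a few lines of Python. This sketch computes time in range for the 70 to 140 mg/dL window, mean glucose in both units, and the coefficient of variation as a simple variability index. The function name and the readings are hypothetical, and the mg/dL to mmol/L conversion uses the molar mass of glucose (about 180.16 g/mol).

```python
from statistics import mean, stdev

MG_DL_PER_MMOL_L = 18.016  # from the molar mass of glucose, ~180.16 g/mol


def cgm_summary(glucose_mg_dl, low=70, high=140):
    """Summarize CGM readings: time in range, mean glucose, and CV."""
    in_range = sum(low <= g <= high for g in glucose_mg_dl)
    avg = mean(glucose_mg_dl)
    return {
        "tir_percent": 100 * in_range / len(glucose_mg_dl),
        "mean_mg_dl": avg,
        "mean_mmol_l": avg / MG_DL_PER_MMOL_L,
        "cv_percent": 100 * stdev(glucose_mg_dl) / avg,
    }


# Hypothetical readings (mg/dL) taken at equal intervals.
readings = [85, 92, 110, 145, 130, 98, 88, 95, 102, 90]
summary = cgm_summary(readings)
print(summary)
```

Because the readings are assumed to be equally spaced, the share of readings in range equals the share of time in range; gaps in the data stream would need to be handled before applying this calculation.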

Troubleshooting Guides

Signal Loss and Connectivity Issues

A Signal Loss alert means the display device is not receiving data from the sensor.

- Potential Cause 1: Excessive distance or physical obstacles between the sensor and receiver/smart device.

- Resolution: Ensure the devices are within 6 meters of each other without obstructive barriers. Keep the display device's Bluetooth enabled and the Dexcom app (or other manufacturer app) open [37].

- Potential Cause 2: Low battery or power-saving mode on the smart device.

- Potential Cause 3: Water on or around the sensor.

- Resolution: While most sensors are water-resistant, water can temporarily disrupt signal transmission. Dry the area thoroughly [36].

Sensor Application and Failure

- Potential Cause 1: Improper sensor application or applicator malfunction.

- Resolution: Do not reuse a sensor. For applicator malfunctions, contact the manufacturer for a replacement and return the faulty unit if requested [36].

- Potential Cause 2: Sensor becomes dislodged.

- Resolution: Ensure the skin is clean, dry, and free of lotions before application. For studies where activity may loosen sensors, consider using approved liquid adhesives or overlay patches to improve retention [36].

- Potential Cause 3: Sensor session has ended or transmitter battery is depleted.

- Resolution: Sensor sessions are finite (e.g., 10 days for Dexcom G6). Transmitters also have a lifespan (e.g., ~3 months for Dexcom G6). Adhere to manufacturer-specified lifetimes and replace components as needed [37].

The table below summarizes key normative CGM data for healthy, non-diabetic adults, which can serve as a baseline for research comparisons [35].

Table 1: Normative CGM Metrics for Healthy, Non-Diabetic Adults

| Metric | Average Value | Significance in Research |
|---|---|---|
| Time in Range (TIR) (70-140 mg/dL) | 96% | Primary endpoint for assessing glycemic stability; a decrease may indicate impaired glucose regulation. |
| Mean 24-h Glucose | 99 ± 7 mg/dL (5.5 ± 0.4 mmol/L) | Provides a central tendency measure for overall glucose exposure. |
| Time <70 mg/dL (Hypoglycemia) | ~15 minutes per day | Establishes a baseline for normal, brief fluctuations into low glucose levels. |
| Time >140 mg/dL (Hyperglycemia) | ~30 minutes per day | Establishes a baseline for normal, brief postprandial spikes. |
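The metrics in Table 1 can be computed from a participant's exported readings for comparison against these baselines. The following is a minimal sketch that assumes equally spaced readings in mg/dL; the function name `summarize_cgm` and the returned keys are illustrative. The conversion factor of 18.016 mg/dL per mmol/L follows from the molar mass of glucose.

```python
from statistics import mean

MGDL_PER_MMOLL = 18.016  # glucose: 1 mmol/L is about 18.016 mg/dL

def summarize_cgm(readings_mgdl, low=70, high=140):
    """Summarize equally spaced CGM readings given in mg/dL.

    With equal spacing, the share of readings in a band approximates
    the share of time spent in that band.
    """
    if not readings_mgdl:
        raise ValueError("at least one reading is required")
    n = len(readings_mgdl)
    in_range = sum(low <= g <= high for g in readings_mgdl)
    below = sum(g < low for g in readings_mgdl)
    above = sum(g > high for g in readings_mgdl)
    avg = mean(readings_mgdl)
    return {
        "tir_pct": 100 * in_range / n,
        "below_pct": 100 * below / n,
        "above_pct": 100 * above / n,
        "mean_mgdl": avg,
        "mean_mmoll": avg / MGDL_PER_MMOLL,
    }

# Hypothetical readings, for illustration only.
summary = summarize_cgm([65, 80, 100, 120, 150])
```

If readings are unevenly spaced or contain gaps, each reading should be weighted by the time it represents rather than counted equally.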

Experimental Protocols

Protocol: Assessing Glycemic Response to Nutritional Interventions

Objective: To evaluate the impact of specific foods or meals on postprandial glucose dynamics in a research cohort.

Materials:

- Commercial CGM system (e.g., Dexcom G7, FreeStyle Libre 3)

- Standardized test meals

- Blood glucose meter (BGM) for calibration/validation

- Data collection platform (e.g., smartphone app, manufacturer's cloud software)

Methodology:

- Baseline Period: Record a minimum of 24 hours of CGM data under the participant's habitual diet to establish a personal baseline.

- Test Meal Administration: After an overnight fast, participants consume the standardized test meal within a fixed time window (e.g., 15 minutes).

- Data Recording: CGM data is collected continuously for a minimum of 2 hours postprandially. Participants should log meal start time and any symptoms.

- Activity Control: Participants should remain sedentary during the 2-hour postprandial observation period.

- Data Analysis: Calculate the glucose Area Under the Curve (AUC), peak glucose value, and time to peak for the test meal. Compare these metrics against the baseline or between different test meals [35] [38].
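The data analysis step above can be sketched as follows. This is an illustration under stated assumptions, not a validated analysis pipeline: the function name is hypothetical, the first reading (meal start) is taken as the baseline, and the incremental AUC simply clips values below baseline to zero, which is a simplification of published incremental AUC definitions.

```python
def postprandial_metrics(minutes, glucose_mgdl):
    """Compute AUC, peak and time to peak for one test meal.

    minutes:      times since meal start, ascending, first value 0
    glucose_mgdl: glucose readings at those times
    """
    if len(minutes) != len(glucose_mgdl) or len(minutes) < 2:
        raise ValueError("need two or more paired time/glucose values")
    baseline = glucose_mgdl[0]
    total_auc = 0.0
    incremental_auc = 0.0
    for i in range(1, len(minutes)):
        dt = minutes[i] - minutes[i - 1]
        # Trapezoidal rule on the raw curve.
        total_auc += dt * (glucose_mgdl[i] + glucose_mgdl[i - 1]) / 2
        # Trapezoidal rule on the rise above baseline (clipped at zero).
        rise_prev = max(glucose_mgdl[i - 1] - baseline, 0)
        rise_curr = max(glucose_mgdl[i] - baseline, 0)
        incremental_auc += dt * (rise_prev + rise_curr) / 2
    peak = max(glucose_mgdl)
    return {
        "total_auc": total_auc,              # mg/dL x min
        "incremental_auc": incremental_auc,  # mg/dL x min above baseline
        "peak_mgdl": peak,
        "time_to_peak_min": minutes[glucose_mgdl.index(peak)],
    }

# Hypothetical 2-hour response sampled every 30 minutes.
meal = postprandial_metrics([0, 30, 60, 90, 120], [90, 130, 120, 100, 90])
```

Using the incremental rather than the total AUC removes differences in fasting glucose between participants, which helps when comparing test meals across a cohort.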

Protocol: Evaluating the Effect of Physical Activity on Glucose Stability

Objective: To quantify the impact of different exercise modalities (e.g., HIIT vs. steady-state cardio) on glycemic control.

Materials:

- CGM system

- Heart rate monitor or fitness tracker

- Equipment for prescribed exercise (treadmill, stationary bike, etc.)

Methodology:

- Pre-Exercise Baseline: Ensure participants begin the exercise session with stable glucose levels (not in a hypoglycemic or hyperglycemic state).

- Exercise Intervention: Participants perform a prescribed exercise protocol at a specific time of day (e.g., post-absorptive state or postprandial).

- Real-Time Monitoring: Record CGM and heart rate data throughout the exercise session and during the recovery period (e.g., 24 hours).

- Data Analysis: Analyze the change in glucose levels during and immediately after exercise. Assess the duration and magnitude of any post-exercise hypoglycemic events and observe the effect on overall 24-hour glycemic variability [35] [38].
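A minimal sketch of this analysis is shown below. The function names are hypothetical. The 70 mg/dL threshold follows the hypoglycemia boundary used in Table 1, and the coefficient of variation is used here as one common glycemic variability index, which is an assumption since the protocol does not name a specific index.

```python
from statistics import mean, stdev

def hypoglycemic_events(minutes, glucose_mgdl, threshold=70):
    """Return (start_min, end_min, nadir_mgdl) for each run of readings below threshold."""
    events = []
    run = []
    for t, g in zip(minutes, glucose_mgdl):
        if g < threshold:
            run.append((t, g))
        elif run:
            # The run just ended: record its span and lowest value.
            events.append((run[0][0], run[-1][0], min(v for _, v in run)))
            run = []
    if run:  # recording ended while still below threshold
        events.append((run[0][0], run[-1][0], min(v for _, v in run)))
    return events

def coefficient_of_variation(glucose_mgdl):
    """Glycemic variability as CV (%) = 100 * SD / mean."""
    return 100 * stdev(glucose_mgdl) / mean(glucose_mgdl)

# Hypothetical recovery-period readings, for illustration only.
events = hypoglycemic_events([0, 5, 10, 15, 20, 25], [95, 68, 64, 72, 69, 90])
cv = coefficient_of_variation([90, 110])
```

In practice a minimum event duration is often required before a run counts as an event; that rule is not applied here and would need to be defined in the study protocol.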

Research Reagent Solutions

Table 2: Essential Materials for CGM-Based Metabolic Research

| Item | Function in Research |
|---|---|
| Commercial CGM System (e.g., Dexcom G7, Abbott Libre 3) | Core device for continuous, ambulatory measurement of interstitial glucose levels. Provides the primary data stream for analysis [35] [38]. |
| Skin Adhesive & Barrier Wipes (e.g., liquid adhesive, barrier films) | Ensures sensor retention for the entire study duration and manages skin reactions to preserve participant compliance and data integrity [36]. |
| Blood Glucose Meter (BGM) & Test Strips | Provides fingerstick capillary blood samples for validation of CGM readings during critical time points or when CGM values are questionable [37]. |
| Data Visualization & Analysis Software (e.g., manufacturer cloud platforms, R, Python) | Enables aggregation, visualization, and statistical analysis of CGM data (e.g., TIR, AUC, glycemic variability) [35]. |
| Standardized Test Meals | Provides a controlled nutritional stimulus for studying postprandial glucose metabolism and allows for comparison across participants and studies [35] [38]. |

Experimental Workflow and Data Interpretation

The following diagram illustrates a generalized workflow for a CGM-based metabolic study, from participant screening to data interpretation.

[Figure: CGM Research Workflow]

Signaling Pathways in Glucose Regulation

The diagram below outlines the core physiological pathways that govern blood glucose levels, which are the foundation for interpreting CGM data.

[Figure: Glucose Homeostasis Pathways]

Mobile Applications for Diet Self-Monitoring and Nutrient Analysis

Troubleshooting Guides and FAQs

Q1: Our research participants are underreporting nutrient intake, particularly sodium and calcium, when using mobile diet apps. What methodologies can improve data accuracy?

A: Underreporting is a common challenge. A pre-post intervention study using the Diet-A mobile application demonstrated significant decreases in reported sodium (p=0.04) and calcium (p=0.03) intake, suggesting systematic underreporting rather than actual dietary change [39]. To improve accuracy:

- Implement 24-hour recall validation: Collect data using 24-hour dietary recalls pre- and post-intervention to establish baseline accuracy metrics and identify reporting patterns [39].

- Multi-modal data entry: Enable both voice and text input to reduce recording burden, which was cited by >70% of participants as a significant barrier to consistent logging [39].

- Strategic prompting: Use timed pop-up notifications at logical intervals (e.g., 11:00 AM for breakfast, 3:00 PM for lunch, 8:00 PM for dinner) to prompt meal recording without relying on participant memory [39].

- Photographic reinforcement: Include functionality to take food photos as memory aids for later data entry when immediate logging isn't feasible [39].
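The strategic prompting idea above can be sketched as a small scheduler. This is an illustration only: the prompt times are the examples given in the text, while the function name `next_prompt` and the data layout are hypothetical and do not describe how the Diet-A application is implemented.

```python
from datetime import datetime, time, timedelta

# Example prompt times from the text; a study would configure its own.
PROMPTS = [
    (time(11, 0), "breakfast"),
    (time(15, 0), "lunch"),
    (time(20, 0), "dinner"),
]

def next_prompt(now):
    """Return (when, meal) for the next logging reminder after `now`."""
    for prompt_time, meal in PROMPTS:
        candidate = datetime.combine(now.date(), prompt_time)
        if candidate > now:
            return candidate, meal
    # All of today's prompts have passed: roll over to tomorrow's first prompt.
    first_time, first_meal = PROMPTS[0]
    tomorrow = now.date() + timedelta(days=1)
    return datetime.combine(tomorrow, first_time), first_meal

# Hypothetical usage at midday and late evening.
midday = next_prompt(datetime(2024, 1, 1, 12, 0))
late = next_prompt(datetime(2024, 1, 1, 21, 0))
```

Keeping the schedule in a single table makes it straightforward to adapt prompt times to individual participants, for example shift workers.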

Q2: How can we maintain participant engagement with diet tracking applications throughout long-term studies to prevent data attrition?

A: Maintaining engagement requires addressing both technical and behavioral factors:

- Reduce recording burden: Implement voice-to-text functionality and simplified portion size selection (e.g., proportion of pre-specified serving sizes) rather than requiring manual weight/volume entries [39].

- Provide immediate feedback: Develop systems that display real-time nutrient intake comparisons to dietary reference standards, giving participants personal insights that reinforce continued use [39].

- Minimize user effort: Utilize barcode scanners and extensive food databases to simplify food logging [40].

- Address participant mindset: In studies using CGM integration, some participants reported that continuous monitoring amplified negative feelings about food, highlighting the need for psychological support components in prolonged interventions [41].

Q3: What integration methods exist between continuous glucose monitoring (CGM) systems and dietary self-monitoring applications to optimize energy efficiency in data collection?

A: Effective CGM-diet integration can create more energy-efficient data collection paradigms by reducing participant burden and automating data capture:

- Real-time feedback systems: In one study, integrating real-time CGM data with nutrition therapy significantly increased whole-grain intake (p=0.02) and plant-based protein intake (p=0.02) and improved sleep efficiency by 5% (p=0.02) [42].

- Standardized interpretation frameworks: Implement simplified approaches like the "1, 2, 3 method" (tracking glucose before and after meals) and "yes/less framework" for food choices, which help participants understand CGM data without technical expertise [41].

- Unified data platforms: Systems that automatically align dietary intake with glycemic responses eliminate redundant manual data entry and manual correlation of the two data streams, reducing the effort and computation required for post-hoc analysis [41].

- Visual data integration: Combine CGM metrics with food intake timelines using intuitive graphics and simplified messaging to enhance comprehension while minimizing cognitive load and engagement time [41].
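The automatic alignment of dietary intake with glycemic responses described above can be sketched with a timestamp lookup. The example is a minimal illustration: the function name, the record layout, and the 2-hour window are assumptions, and it does not represent any specific platform's API.

```python
from bisect import bisect_left, bisect_right
from datetime import datetime, timedelta

def align_meals_to_cgm(meals, cgm, window=timedelta(hours=2)):
    """Attach the CGM readings that follow each logged meal.

    meals: list of (meal_start, label)
    cgm:   list of (timestamp, glucose_mgdl), sorted by timestamp
    """
    times = [t for t, _ in cgm]
    aligned = []
    for meal_start, label in meals:
        # Binary search for the readings inside [meal_start, meal_start + window].
        first = bisect_left(times, meal_start)
        last = bisect_right(times, meal_start + window)
        values = [g for _, g in cgm[first:last]]
        aligned.append({
            "meal": label,
            "n_readings": len(values),
            "peak_mgdl": max(values) if values else None,
        })
    return aligned

# Hypothetical data: readings every 30 minutes from 08:00 to 11:00.
start = datetime(2024, 1, 1, 8, 0)
cgm = [(start + timedelta(minutes=30 * i), g)
       for i, g in enumerate([90, 130, 120, 100, 92, 88, 85])]
meals = [(start, "breakfast"), (datetime(2024, 1, 1, 12, 0), "lunch")]
aligned = align_meals_to_cgm(meals, cgm)
```

A meal with no readings in its window (as with the hypothetical lunch entry) signals a data gap that should be flagged rather than silently dropped.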

Q4: What technical support infrastructure ensures reliable operation of dietary monitoring applications in research settings?

A: A robust support system is essential for research continuity:

- Multi-channel support: Implement in-app chat for immediate assistance, email support for complex queries, and knowledge bases for self-service troubleshooting [43] [44].

- Prioritized response protocol: Categorize issues into critical (app functionality), important (user experience), and routine (general inquiries), with target response times of 15-30 minutes for critical issues [43].

- Proactive monitoring: Use analytics to identify common points of failure in the data recording workflow and implement preventive fixes through regular updates [44].

- Comprehensive training: Ensure research staff are proficient in both technical troubleshooting and communicating with participants about data recording challenges [43].

Experimental Protocols for Dietary Monitoring Research

Protocol 1: Validation of Mobile Application Against Traditional Dietary Assessment Methods

Objective: To compare nutrient intake data from mobile applications against 24-hour dietary recalls.

Methodology:

- Recruit participants meeting study criteria (e.g., n=33, as in the Diet-A study) [39]

- Collect baseline demographic data and smartphone proficiency metrics

- Administer 24-hour dietary recalls pre-intervention by trained interviewers

- Implement mobile application with voice/text input and portion size selection

- Conduct post-intervention 24-hour dietary recalls

- Analyze differences using statistical methods (e.g., general linear model repeated measures)

Energy Efficiency Consideration: This protocol reduces long-term resource expenditure by validating the more scalable mobile method against the resource-intensive interview method.
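For the analysis step of Protocol 1, the pre/post comparison can be illustrated with a paired t statistic. This is a deliberately simpler stand-in for the general linear model repeated measures analysis named in the protocol: with only two time points and no other factors, the repeated measures F statistic equals the square of the paired t statistic. The function name and the example values are hypothetical, and a p-value would require a statistics library with the t distribution.

```python
from math import sqrt
from statistics import mean, stdev

def paired_change(pre, post):
    """Mean within-participant change and paired t statistic (post minus pre)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two or more paired observations")
    diffs = [b - a for a, b in zip(pre, post)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    mean_diff = mean(diffs)
    t_stat = mean_diff / (sd / sqrt(len(diffs)))
    return {"mean_diff": mean_diff, "t": t_stat, "df": len(diffs) - 1}

# Hypothetical sodium intakes (mg/day) for four participants, not study data.
result = paired_change(pre=[3000, 3200, 2800, 3100],
                       post=[2800, 3100, 2700, 2800])
```

A negative mean difference here only shows that reported intake fell; as noted in the FAQ above, deciding whether that reflects underreporting or real dietary change needs an independent reference measure.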

Protocol 2: Integrated CGM and Nutrition Intervention Framework

Objective: To assess the impact of real-time CGM feedback on dietary quality and sleep efficiency.

Methodology (adapted from Basiri et al., 2025) [42]:

- Randomize participants to treatment (unblinded CGM) or control (blinded CGM) groups

- Provide individualized nutrition recommendations tailored to energy needs for both groups

- Equip treatment group with real-time CGM access with nutrition-focused interpretation guides

- Assess dietary intake using ASA24 recall or equivalent automated system

- Measure sleep quality through validated instruments

- Analyze outcomes via appropriate statistical tests with a significance threshold of p<0.05

Energy Efficiency Consideration: This protocol leverages continuous automated glucose monitoring to reduce the need for frequent laboratory blood draws and manual dietary assessment.
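The randomization step of Protocol 2 can be sketched as a seeded shuffle. This is a minimal illustration, not the allocation procedure used by Basiri et al.: the 1:1 allocation, the function name, and the group labels are assumptions, and a real trial would typically use concealed allocation managed outside the analysis code.

```python
import random

def randomize(participant_ids, seed=None):
    """Split participants 1:1 into unblinded-CGM and blinded-CGM groups."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {
        "treatment_unblinded_cgm": sorted(ids[:half]),
        "control_blinded_cgm": sorted(ids[half:]),
    }

# Hypothetical participant identifiers.
participants = [f"P{i:02d}" for i in range(1, 11)]
groups = randomize(participants, seed=42)
```

Recording the seed alongside the allocation list allows the assignment to be audited later.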

Research Workflow Visualization

[Figure: Dietary App Validation Workflow]

[Figure: CGM-Nutrition Integration Data Flow]

Research Reagent Solutions

Table: Essential digital tools for dietary monitoring research

| Research Tool | Function | Research Application |

|---|---|---|

| MyFitnessPal | Extensive food database with barcode scanning and macro/micronutrient tracking [40] | Validation studies comparing app-generated data to traditional dietary assessment methods |